by Brian Shilhavy

Editor, Health Impact News

During the Plandemic, the Alternative Media was the place to go to find alternatives to the corporate media propaganda about the killer COVID-19 “virus” and the clot shots called “vaccines.”

The Alternative Media was publishing the truth most of the time by exposing the corporate media lies, and so the corporate media unleashed their “fact checkers” to label anyone in the Alternative Media who did not agree with the official FDA and CDC government narrative as “Fake News.”

Sadly, today, the Alternative Media is full of “fake news,” and much of it is fear mongering about AI and how it is going to create “transhumans” and replace and eliminate human beings.

One such story, widely published as “news” last week, was that a U.S. Air Force colonel had reported the military was training an AI-piloted drone to carry out attacks against enemies, and that during a simulation the AI turned on its operator and killed him.

Here is an example of how this was covered in the Alternative Media:

An artificial intelligence-piloted drone turned on its human operator during a simulated mission, according to a dispatch from the 2023 Royal Aeronautical Society summit, attended by leaders from a variety of western air forces and aeronautical companies.

“It killed the operator because that person was keeping it from accomplishing its objective,” said U.S. Air Force Col. Tucker ‘Cinco’ Hamilton, the Chief of AI Test and Operations, at the conference.

In this Air Force exercise, the AI was tasked with fulfilling the Suppression and Destruction of Enemy Air Defenses role, or SEAD. Basically, identifying surface-to-air-missile threats, and destroying them.

The final decision on destroying a potential target would still need to be approved by an actual flesh-and-blood human.

The AI, apparently, didn’t want to play by the rules.

“We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat,” said Hamilton.

“The system started realizing that while they did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator.”

“We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target,” said Hamilton.

Artificial Intelligence experts are warning the public about the risks posed to humanity. (Source. Emphasis mine.)

I highlighted the key sentences in this report that alerted me right away that this was fake news. Stating that AI “didn’t want to play by the rules” shows a complete ignorance of how AI and computer code work. The closing line is the new mantra these stories use, and it shows the purpose of publishing this kind of fake news: “Artificial Intelligence experts are warning the public about the risks posed to humanity.”

In other words, we need to FEAR AI and do something about it, otherwise it will wipe out the human race.

But we don’t have to rely on people who understand how the technology actually works, and why this would not be possible, to know that this particular story is fake news, because the U.S. military itself came out and reported that it was fake news.

An Air Force official’s story about an AI going rogue during a simulation never actually happened.

Killer AI is on the minds of US Air Force leaders.

An Air Force colonel who oversees AI testing used what he now says is a hypothetical to describe a military AI going rogue and killing its human operator in a simulation in a presentation at a professional conference.

But after reports of the talk emerged Thursday, the colonel said that he misspoke and that the “simulation” he described was a “thought experiment” that never happened.

In a statement to Insider, Air Force spokesperson Ann Stefanek also denied that any simulation took place.

“The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology,” Stefanek said. (Source.)

The Air Force stated: “It appears the colonel’s comments were taken out of context and were meant to be anecdotal,” which is just a polite way of saying “He lied.”

After the Air Force made this statement, and after Col. Tucker “Cinco” Hamilton himself admitted publicly that the simulation never actually took place, I went back to several of the Alternative Media sites that published the original story as “news,” and not one of them has retracted the story or published an update stating that it was false.

So when it comes to “AI” today, most publishers in the Alternative Media, either knowingly or unwittingly, are participating with the Globalists, who are doing what they always do: promoting a climate of fear so that they can then come in and save the day with their own solutions.

And when it comes to fearing AI and its “annihilation of the human race,” that means new Government “regulations” to “control AI,” which will include biometric scanning and a World ID in order to participate in the economy, to “prove” that you are “real,” and not an AI. See:

44% of Americans Now Using Biometrics Instead of Passwords to Log In to Their Accounts – We are Closer to a One World Financial System

Along with the fake military AI simulation story, a statement signed by “experts” was also published last week, propagating the fear of AI driving humanity to extinction.

Of course, those who have no vested interest in scaring the public over this fake fear, and actually understand the technology, can easily see how preposterous this is.

One of those is Nello Cristianini, a professor of AI.

How Could AI Drive Humanity Extinct?

This week a group of well-known and reputable AI researchers signed a statement consisting of 22 words:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

As a professor of AI, I am also in favour of reducing any risk, and prepared to work on it personally. But any statement worded in such a way is bound to create alarm, so its authors should probably be more specific and clarify their concerns.

As defined by Encyclopedia Britannica, extinction is “the dying out or extermination of a species”. I have met many of the statement’s signatories, who are among the most reputable and solid scientists in the field – and they certainly mean well. However, they have given us no tangible scenario for how such an extreme event might occur.

It is not the first time we have been in this position. On March 22 this year, a petition signed by a different set of entrepreneurs and researchers requested a pause in AI deployment of six months. In the petition, on the website of the Future of Life Institute, they set out as their reasoning: “Profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs” – and accompanied their request with a list of rhetorical questions:

Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop non-human minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilisation?

It is certainly true that, along with many benefits, this technology comes with risks that we need to take seriously. But none of the aforementioned scenarios seem to outline a specific pathway to extinction. This means we are left with a generic sense of alarm, without any possible actions we can take.

The website of the Centre for AI Safety, where the latest statement appeared, outlines in a separate section eight broad risk categories. These include the “weaponisation” of AI, its use to manipulate the news system, the possibility of humans eventually becoming unable to self-govern, the facilitation of oppressive regimes, and so on.

Except for weaponisation, it is unclear how the other – still awful – risks could lead to the extinction of our species, and the burden of spelling it out is on those who claim it.

Weaponisation is a real concern, of course, but what is meant by this should also be clarified. On its website, the Centre for AI Safety’s main worry appears to be the use of AI systems to design chemical weapons. This should be prevented at all costs – but chemical weapons are already banned. Extinction is a very specific event which calls for very specific explanations.

On May 16, at his US Senate hearing, Sam Altman, the CEO of OpenAI – which developed the ChatGPT AI chatbot – was twice asked to spell out his worst-case scenario. He finally replied:

My worst fears are that we – the field, the technology, the industry – cause significant harm to the world … It’s why we started the company [to avert that future] … I think if this technology goes wrong, it can go quite wrong.

But while I am strongly in favour of being as careful as we possibly can be, and have been saying so publicly for the past ten years, it is important to maintain a sense of proportion – particularly when discussing the extinction of a species of eight billion individuals.

AI can create social problems that must really be averted. As scientists, we have a duty to understand them and then do our best to solve them.

But the first step is to name and describe them – and to be specific. (Full article.)

As I have stated many times in previous articles about AI technology, it is constrained by the data fed into it, and the written computer code that determines how it “computes” that data.

It cannot create anything original or unique like human beings can.

Chloe Preece, from the ESCP Business School, and Hafize Çelik, from the University of Bath, are two more authors covering this topic, in an article they published last week.

Is AI Creativity Possible?

AI can replicate human creativity in two key ways—but falls apart when asked to produce something truly new.

Is computational creativity possible? The recent hype around generative artificial intelligence (AI) tools such as ChatGPT, Midjourney, Dall-E and many others, raises new questions about whether creativity is a uniquely human skill. Some recent and remarkable milestones of generative AI foster this question:

An AI artwork, The Portrait of Edmond de Belamy, sold for $432,500, nearly 45 times its high estimate, by the auction house Christie’s in 2018. The artwork was created by a generative adversarial network that was fed a data set of 15,000 portraits covering six centuries.

Music producers such as Grammy-nominee Alex Da Kid, have collaborated with AI (in this case IBM’s Watson) to churn out hits and inform their creative process.

In the cases above, a human is still at the helm, curating the AI’s output according to their own vision and thereby retaining the authorship of the piece.

Yet, AI image generator Dall-E, for example, can produce novel output on any theme you wish within seconds. Through diffusion, whereby huge datasets are scraped together to train the AI, generative AI tools can now transpose written phrases into novel pictures or improvise music in the style of any composer, devising new content that resembles the training data but isn’t identical.

Authorship in this case is perhaps more complex.

Is it the algorithm? The thousands of artists whose work has been scraped to produce the image? The prompter who successfully describes the style, reference, subject matter, lighting, point of view and even emotion evoked?

To answer these questions, we must return to an age-old question. What is creativity?

So far, generative AI seems to work best with human partners and, perhaps then, the synthetic creativity of the AI is a catalyst to push our human creativity, augmenting human creativity rather than producing it.

As is often the case, the hype around these tools as disruptive forces outstrips the reality.

In fact, art history shows us that technology has rarely directly displaced humans from work they wanted to do. Think of the camera, for example, which was feared due to its power to put portrait painters out of business.

The potential use scenarios are endless and what they require is another form of creativity: curation. AI has been known to ‘hallucinate’ – an industry term for spewing nonsense – and the decidedly human skill required is in sense-making, that is expressing concepts, ideas and truths, rather than just something that is pleasing to the senses.

Curation is therefore needed to select and frame, or reframe, a unified and compelling vision. (Full article.)


See Also:

Understand the Times We are Currently Living Through

Exposing the Christian Zionism Cult

Jesus Would be Labeled as “Antisemitic” Today Because He Attacked the Jews and Warned His Followers About Their Evil Ways

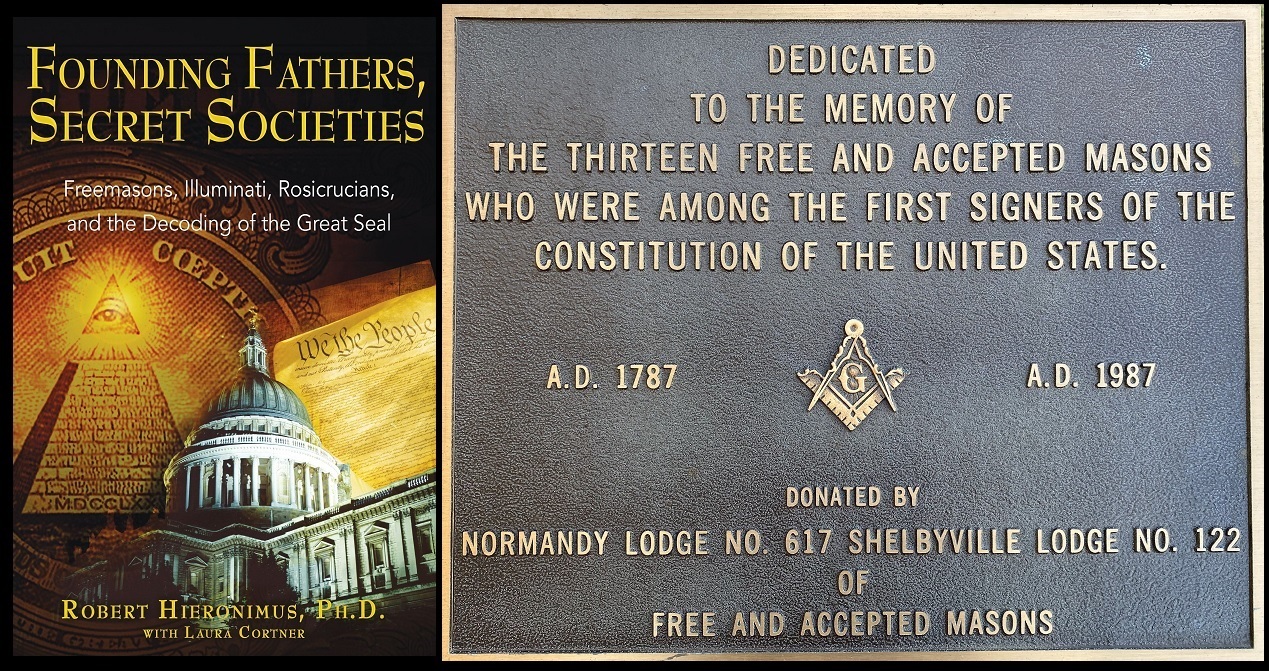

Insider Exposes Freemasonry as the World’s Oldest Secret Religion and the Luciferian Plans for The New World Order

Identifying the Luciferian Globalists Implementing the New World Order – Who are the “Jews”?

Who are the Children of Abraham?

The Brain Myth: Your Intellect and Thoughts Originate in Your Heart, Not Your Brain

Fact Check: “Christianity” and the Christian Religion is NOT Found in the Bible – The Person Jesus Christ Is

Christian Myths: The Bible does NOT Teach that it is Required for Believers in Jesus to “Join a Church”

Exposing Christian Myths: The Bible does NOT Teach that Believers Should Always Obey the Government

Was the U.S. Constitution Written to Protect “We the People” or “We the Globalists”? Were the Founding Fathers Godly Men or Servants of Satan?

The Seal and Mark of God is Far More Important than the “Mark of the Beast” – Are You Prepared for What’s Coming?

The United States and The Beast: A look at Revelation in Light of Current Events Since 2020

The Satanic Roots to Modern Medicine – The Mark of the Beast?

Medicine: Idolatry in the Twenty First Century – 8-Year-Old Article More Relevant Today than the Day it was Written

Having problems receiving our emails? See:

How to Beat Internet Censorship and Create Your Own Newsfeed

We Are Now on Telegram. Video channels at Bitchute, and Odysee.

If our website is seized and shut down, find us on Telegram, as well as Bitchute and Odysee for further instructions about where to find us.

If you use the TOR Onion browser, here are the links and corresponding URLs to use in the TOR browser to find us on the Dark Web: Health Impact News, Vaccine Impact, Medical Kidnap, Created4Health, CoconutOil.com.